Yesterday, in a discussion with a student on how to structure their design report, I found myself constructing a little typology of three types of justification for design decisions, each with its own rhetorical structure and form of presentation.

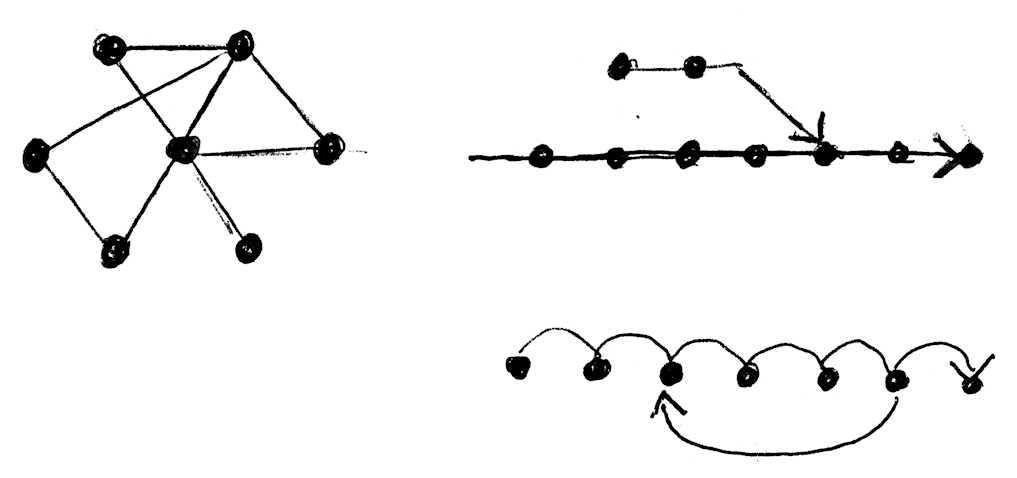

First, a particular feature of a design can be selected from alternatives developed in parallel. We do this at the overall level with concepts, usually three of them. These alternatives do not follow from one another but are developed independently: each explores a different approach, and each represents a different set of trade-offs. Sometimes they are developed in sequence, one after the other, but they are sufficiently independent that they could have been developed in parallel, as three alternative answers to the same design problem. That independence is what allows each option to be evaluated against the same set of criteria. You can also do this at the level of details: alternative ways to construct the frame, for instance, or different options for a hub assembly. In a report, you’d present these options side by side, with an argument for why one of them is the better choice.
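One common way to make such a side-by-side comparison explicit is a weighted decision matrix, in which every alternative is scored on the same criteria. The sketch below is only an illustration under assumptions: the criteria, weights, 1-to-5 scores, and concept names are hypothetical, and a weighted sum is just one possible way to aggregate scores, not necessarily the right argument for a particular report.

```python
# Hypothetical criteria and weights (summing to 1); all values are illustrative.
CRITERIA_WEIGHTS = {"cost": 0.3, "weight": 0.2, "manufacturability": 0.3, "durability": 0.2}

# Hypothetical 1-to-5 scores for three concepts, each scored on the same criteria.
CONCEPT_SCORES = {
    "Concept A": {"cost": 4, "weight": 3, "manufacturability": 5, "durability": 3},
    "Concept B": {"cost": 3, "weight": 5, "manufacturability": 2, "durability": 4},
    "Concept C": {"cost": 5, "weight": 2, "manufacturability": 3, "durability": 4},
}


def weighted_total(scores: dict, weights: dict) -> float:
    """Weighted sum of one concept's scores; every concept must use the same criteria."""
    if set(scores) != set(weights):
        raise ValueError("each alternative must be scored on the same criteria")
    return sum(weights[c] * scores[c] for c in weights)


def rank_concepts(concepts: dict, weights: dict) -> list:
    """Return (name, total) pairs, best first."""
    totals = {name: weighted_total(scores, weights) for name, scores in concepts.items()}
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


for name, total in rank_concepts(CONCEPT_SCORES, CRITERIA_WEIGHTS):
    print(f"{name}: {total:.2f}")
# prints:
# Concept A: 3.90
# Concept C: 3.60
# Concept B: 3.30
```

The numbers only summarize the comparison; the argument in the report would still need to explain why the weights are what they are and which trade-offs the chosen option accepts.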

Second, design features or geometries can be the endpoint of a single-track, iterative exploration or evolution. In this case you also have a number of alternatives that were considered, but they are not equivalent and could not have been developed independently or in parallel. Instead, they form a sequence in which the evaluation of each iteration's strengths and weaknesses forms the argument for the next one. The criteria used to get from one step to the next might differ from the considerations that led to the step after that. In a report, you can present the main stages of such an evolution, arranged chronologically, together with an explanation of the dimensions, features, or phenomena that turned out to be the most relevant, and how they shaped (and justify) the final form and properties of the part or construction.

Third, design features can also be the outcome of calculations that determine their correct or optimal value. Such design decisions may also have gone through iterations, or have been considered alongside alternatives, but that history is no longer relevant to arguing for the final outcome. Such decisions (a gear ratio, the length of a lever, the thickness of a beam) are best and most clearly justified by presenting a mathematical model, or formula, that incorporates particular assumptions, constraints, and safety margins and leads to a single correct or optimal value.
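As a minimal sketch of this third type, assume the part is a rectangular beam clamped at one end and loaded by a single force F at its free end, and that its height h is sized so the bending stress at the clamped end stays below the yield strength divided by a safety factor n. Under simple linear-elastic beam theory, the moment there is M = F·L, the stress in a section of width b is σ = 6M / (b·h²), and requiring σ ≤ σ_y / n gives h ≥ √(6·F·L·n / (b·σ_y)). The load case, material values, and the function name `min_beam_height` are hypothetical; a real part would need its own model and further checks (deflection, fatigue, buckling).

```python
import math


def min_beam_height(force_n: float, length_m: float, width_m: float,
                    yield_strength_pa: float, safety_factor: float) -> float:
    """Minimum height (m) of a rectangular cantilever with a point load at its tip.

    Hypothetical sizing model: linear-elastic bending, stress checked only at
    the clamped end, where the bending moment is largest.
    """
    moment = force_n * length_m                      # M = F * L (N*m)
    allowable = yield_strength_pa / safety_factor    # sigma_allow = sigma_y / n (Pa)
    # sigma = 6 M / (b h^2) <= sigma_allow  =>  h >= sqrt(6 M / (b * sigma_allow))
    return math.sqrt(6.0 * moment / (width_m * allowable))


# Illustrative numbers only: 500 N at the tip of a 300 mm arm, 20 mm wide,
# an assumed yield strength of 250 MPa, and a safety factor of 2.
h = min_beam_height(500.0, 0.3, 0.02, 250e6, 2.0)
print(f"minimum height: {h * 1000:.1f} mm")  # prints "minimum height: 19.0 mm"
```

In a report, the computed value would then be rounded up to a practical dimension, with the formula and its assumptions shown as the justification.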